To deal with the “software crisis” in the 1980s, the US Air Force prompted the Software Engineering Institute (SEI) to develop a method for selecting software contractors. A study of past projects showed that they often failed for non-technical reasons such as poor configuration management and poor process control.

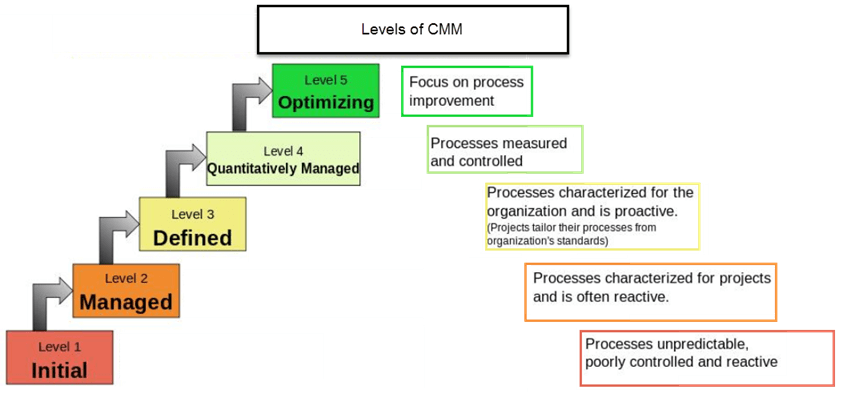

In essence, the SEI combined the idea of the “software lifecycle” with the idea of quality maturity levels to develop the SW-CMM, or simply CMM, which rates a software organization’s maturity level. In CMM, maturity is measured on a scale of 1 to 5. Rather than enumerating and describing each level, suffice it to say that maturity ranges from level 1, or “initial,” which applies to the least mature organizations (e.g. a start-up with two developers), up to level 5, “optimizing,” which applies to the most mature organizations, whose processes are standardized, repeatable, predictable, and continuously improved.

There is a set of Key Process Areas (KPAs) that an organization must address in order to rate at each level. A KPA is met when the organization has a defined process for it and is both committed to and able to follow that process, all while measuring, analysing, and verifying its implementation. For example, an organization must have defined practices for Software Configuration Management to earn a Level 2 or higher rating.
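The rating logic described above can be sketched in a few lines of Python. This is a minimal illustration, not the SEI's appraisal method: the `REQUIRED_KPAS` table lists only a few KPAs per level, the `maturity_level` function is a hypothetical helper, and the sketch assumes that levels are cumulative, so an organization rates at a level only if it also satisfies the KPAs of every lower level.

```python
# Illustrative sketch only. The KPA sets below are abbreviated (a real
# appraisal covers more KPAs per level) and the function is hypothetical.
REQUIRED_KPAS = {
    2: {"Requirements Management",
        "Software Project Planning",
        "Software Configuration Management"},
    3: {"Organization Process Definition", "Peer Reviews"},
    4: {"Quantitative Process Management", "Software Quality Management"},
    5: {"Defect Prevention", "Process Change Management"},
}


def maturity_level(satisfied_kpas):
    """Return the highest level whose KPAs, and those of all lower levels, are met."""
    level = 1  # Level 1 ("initial") has no KPA requirements.
    for candidate in sorted(REQUIRED_KPAS):
        if REQUIRED_KPAS[candidate] <= satisfied_kpas:  # subset test
            level = candidate
        else:
            break  # Assumed cumulative: a gap at this level caps the rating.
    return level


level_2_only = set(REQUIRED_KPAS[2])
print(maturity_level(level_2_only))
```

Under these assumptions, an organization that satisfies the Level 4 KPAs but skips those of Level 3 still rates at Level 2, which mirrors the idea that each level builds on the ones below it.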

The implication here is that with higher maturity levels comes higher software process performance. From a management perspective, predictable software development means better performance on cost and productivity.

The CMM represents a good set of software “common sense”. One of the good things about it is that it guides an organization on what process areas to address, but does not dictate how this must be done. This freedom sometimes leads organizations down a slippery slope of process overload, where an exorbitant amount of time and money is spent developing, maintaining, and deploying processes aimed at complying with the CMM KPAs.

In 2002, the SEI’s Capability Maturity Model Integration (CMMI) replaced the CMM. The difference, at a high level, is that the CMMI added new “process areas” to the CMM (e.g. Measurement and Analysis) and better integrated the non-software development processes involved in product development (e.g. Systems Engineering activities).

Practices added in CMMI models are improvements and enhancements to the SW-CMM. Many of the new practices in CMMI models are already being implemented by organizations that have successfully implemented processes based on the improvement spirit of SW-CMM best practices (SEI 2002). For the purposes of this research we will use the terms “CMM” and “CMMI” interchangeably to mean “process improvement model that defines key processes for product development enterprises.”

As a developer, I experienced the process overhaul leading to an initial assessment at CMM level 3 in the late nineties, and later, as a software development manager, I saw our organization mature to CMMI level 5. Today we have a “Common Process Architecture,” a complex framework of process Work Instructions and measurement capabilities designed as our implementation of the CMMI.

The problem with “mature” organizations, perhaps, is that we become encumbered with the cost and effort of managing processes and of collecting, analysing, and reporting on metrics (in fact, the CMMI introduced a whole process area for Measurement and Analysis). “Optimizing” means continuous improvement, so we do things like Six Sigma projects to improve and optimize processes. The authors of the original CMM document purport to have reached “broad consensus” with the software community. One must wonder whether that community really represents the whole of the software community, because we cannot find examples of large commercial software firms such as Google or Microsoft adopting CMMI or boasting high CMMI ratings.

Since 2002 the Defense Department has mandated that contractors responding to Requests for Proposal (RFPs) show that they have implemented CMMI practices. Other branches of the Federal Government have also begun to require a minimum CMMI maturity level. Often federal RFPs specify a minimum of CMMI level 3. It is therefore no surprise that the contractor community has embraced the CMM (and subsequently the CMMI). It is reported to have worked well for many large, multi-year programs with stable requirements, funding, and staffing.

Traditional criticisms of the CMM are that it imposes too much process on firms, making them less productive and efficient. It is said that only organizations of a certain scale can afford the resources required to control and manage all of the KPAs at higher maturity levels. But again, the CMM tells us what process areas to address, not how to address them (although best practices are suggested). Problems arise when firms design their processes ineffectively.

We feel the need to mention the CMM in this research because, in industry, the case is often presented as “Agile vs. CMM”. In fact, CMM is not at odds with Agile. On the contrary, the two can be very complementary. CMMI version 1.3 was released in November 2010, adding support for Agile. Process areas in the CMMI were annotated to explain how to interpret them in the context of Agile practices. There are even several published examples of “Agile CMMI Success Stories”, for example the CollabNet project at a large investment banking firm [4]. Again, the CMM only guides an organization on what process areas to address, but does not dictate how this must be done. Organizations only get into trouble when they over-engineer their processes, making them so cumbersome and time-consuming that projects lose their potential for agility and responsiveness under the weight of their engineering processes.