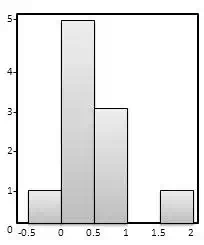

A histogram is a graphical representation of the distribution of numerical data. It gives an estimate of the probability distribution of a continuous (quantitative) variable.

Problem Statement:

Every month, you measure how much weight your dog has gained and get these results:

| 0.5 | 0.5 | 0.3 | -0.2 | 1.6 | 0 | 0.1 | 0.1 | 0.6 | 0.4 |

Draw a histogram showing how much the dog is growing.

Solution:

The monthly weight gains vary from -0.2 (the dog lost weight that month) to 1.6. Put them in order from lowest to highest weight gain:

| -0.2 | 0 | 0.1 | 0.1 | 0.3 | 0.4 | 0.5 | 0.5 | 0.6 | 1.6 |

We decide to put the results into groups of 0.5:

· The -0.5 to just below 0 range.

· The 0 to just below 0.5 range, etc.
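The grouping step can be sketched in a few lines of Python. This is only an illustration: the names `gains`, `START`, `BIN_WIDTH`, and `bin_counts` are chosen here and are not part of the original problem, and the sketch assumes the first group starts at -0.5 and that each group includes its lower edge but not its upper edge.

```python
import math

# Monthly weight gains from the problem statement.
gains = [0.5, 0.5, 0.3, -0.2, 1.6, 0, 0.1, 0.1, 0.6, 0.4]

START = -0.5      # assumed lower edge of the first group
BIN_WIDTH = 0.5   # each group spans 0.5

def bin_counts(values, start=START, width=BIN_WIDTH):
    """Count how many values fall in each group [edge, edge + width)."""
    # Enough groups to reach the largest value.
    n_bins = math.floor((max(values) - start) / width) + 1
    counts = [0] * n_bins
    for v in values:
        # Index of the group whose range contains v.
        counts[math.floor((v - start) / width)] += 1
    return counts

counts = bin_counts(gains)
print(counts)
```

Each entry of `counts` is the height of one bar, starting with the -0.5 to just below 0 group.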

And here is the result:

There are no values from 1 to just below 1.5, but we still show the space.
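As a rough illustration of why the empty group still gets its space, the sketch below prints a text version of the histogram. The counts are tallied by hand from the sorted data (one value below 0, five from 0 to just below 0.5, three from 0.5 to just below 1, none from 1 to just below 1.5, and one from 1.5 to just below 2). The function name `render_rows` and the row layout are choices made for this example only.

```python
# Number of values in each group of width 0.5, starting at -0.5.
counts = [1, 5, 3, 0, 1]

def render_rows(counts, start=-0.5, width=0.5):
    """Build one text row per group, including groups with zero values."""
    rows = []
    for i, count in enumerate(counts):
        low = start + i * width
        high = low + width
        # An empty group still gets a row; it just has no bar.
        rows.append(f"{low:>4.1f} to <{high:<4.1f} | {'#' * count}")
    return rows

rows = render_rows(counts)
for row in rows:
    print(row)
```

Dropping the empty row would place the 1.5 to just below 2 bar directly next to the 0.5 to just below 1 bar, which would hide the gap in the data.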