In 2010, a new opportunity to employ agile development presented itself: the FAA was seeking to upgrade the data recording capabilities of its terminal ATC systems. The deployed systems were recording data on outdated storage media, namely magnetic tapes written by DAT (Digital Audio Tape) drives. The FAA wanted to upgrade the system and software to record data onto RAID (Redundant Array of Independent Disks) storage devices, for sound reasons such as reliability, redundancy, capacity, and performance. The effort was dubbed “CDR-R”, for Continuous Data Recorder Replacement. The software changes required for this transition were complex, requiring modifications to portions of the baseline code, some of which dated back to the 1980s. Data recording is a critical component of the system, and the FAA rightly takes it very seriously: the recorded data is used not only to investigate incidents but also to support analysis of FAA operations.

The problem was that the estimated scope of the changes needed to implement the solution did not fit into the customer’s yearly budget and schedule. This seemed to me to be an opportunity to try a new delivery approach. First, inspired by Feature-Driven Development (FDD), I proposed that we divide the scope of changes into a set of ‘features’ such as ‘RAID recording’, ‘RAID synchronization’, and ‘data transfer’. Then, inspired by the spiral development model (described in section 3.3.2), I proposed delivering the CDR-R changes incrementally in three phases: the first phase would provide basic RAID-based recording capabilities; the second would add monitoring and control capabilities for the RAIDs, along with data transfer tools to migrate data across various media and system installations; and the third would add some data maintenance functions. This delivery model, new in our program’s context, made the CDR-R transition more palatable for our customer and fit better with their funding cycles, leading them to authorize the start of CDR-R development.

For the first phase’s set of features, we employed our traditional waterfall approach: software requirements were developed to satisfy the system-level requirements, a design was then produced to fulfill the software requirements, code was written to implement the design, and finally the software was tested and integrated. This phase ended with a software delivery containing the full scope of planned phase-1 modifications; however, the development effort suffered from cost overruns and internal schedule slippage. Much rework was generated toward the end of the development phase, when we began testing the software and encountered complications running it in the target hardware environment. Several other problems required us to revisit the requirements, the design, and the underlying code. In my opinion, these issues had two main root causes: unclear or uncertain requirements, and a delayed ability to test the software.

These two issues compound each other. Because the waterfall approach develops components in isolation from each other, the system functionality we were trying to achieve only came into being at the tail end of the development process, once all of the CSCIs (software components) had been developed, tested in isolation, and then integrated. Unfortunately, we typically reach this point only after three quarters or more of the schedule and budgeted effort have been consumed. This may not be a problem if the requirements are well defined, correct, complete, and unambiguous, but here we found this not to be the case. Identifying requirements issues that late in the development process meant several rework iterations cycling back through requirements, design, and code at the very end of the development cycle, when little time was left. Two key project performance measures for phase 1, the Schedule Performance Index (SPI) and the Cost Performance Index (CPI), came in at 0.82 and 0.76 respectively. In other words, the work accomplished was 18% short of what had been planned to date, and the value earned was 24% below the actual cost incurred (equivalently, costs ran about 32% above the value of the work delivered).
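The SPI and CPI figures above follow the standard earned value definitions, SPI = EV/PV and CPI = EV/AC, where PV is the planned value of the work scheduled to date, EV is the earned (budgeted) value of the work actually performed, and AC is the actual cost incurred. The minimal Python sketch below illustrates the arithmetic. The PV, EV, and AC amounts are hypothetical, chosen only so that the indices reproduce the reported phase-1 values of 0.82 and 0.76, since the program's actual figures are not given here; the class name `EarnedValueSnapshot` is likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class EarnedValueSnapshot:
    """Earned value figures at a status date, all in the same units (e.g. dollars or hours)."""

    planned_value: float  # PV: budgeted cost of the work scheduled to date
    earned_value: float   # EV: budgeted cost of the work actually performed
    actual_cost: float    # AC: actual cost of the work performed

    @property
    def spi(self) -> float:
        """Schedule Performance Index, EV / PV; below 1.0 means behind schedule."""
        return self.earned_value / self.planned_value

    @property
    def cpi(self) -> float:
        """Cost Performance Index, EV / AC; below 1.0 means over cost."""
        return self.earned_value / self.actual_cost

    @property
    def cost_overrun(self) -> float:
        """Fraction by which actual cost exceeds earned value, AC / EV - 1."""
        return self.actual_cost / self.earned_value - 1.0


# Hypothetical amounts, chosen only to reproduce the reported phase-1 indices.
phase1 = EarnedValueSnapshot(planned_value=7600.0, earned_value=6232.0, actual_cost=8200.0)
print(f"SPI = {phase1.spi:.2f}")                    # SPI = 0.82
print(f"CPI = {phase1.cpi:.2f}")                    # CPI = 0.76
print(f"Cost overrun = {phase1.cost_overrun:.1%}")  # Cost overrun = 31.6%
```

Because CPI divides by actual cost rather than by earned value, a CPI of 0.76 corresponds to actual costs roughly 32% above earned value (1/0.76 - 1), even though earned value is 24% below actual cost.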

For the second phase, I proposed that we employ an Agile approach to development based on the Scrum methodology (described later in this research). Management was receptive to this proposal and eager to support the effort, especially as the desire to “go agile” was being communicated top-down through the organization, exemplified by a corporate-backed initiative named “SWIFT” (Software Innovation for Tomorrow) that had begun piloting Agile practices on several other large programs in the company. Note that ours was not one of the SWIFT pilot programs; rather, it reflected an organic, home-grown desire to employ Agile methods, with prior successes in AIG development and the PrOOD in mind.

A small team of developers was assembled, trained in the Scrum methodology, and produced the phase-2 software increment in three sprints of three weeks each. Nine weeks is relatively fast in an organization where a code inspection alone can consume a week of development time. Phase-2 thus achieved an SPI of 1.07 (7% ahead of schedule), yet its CPI of 0.73 remained poor, slightly below phase-1’s 0.76.

While the Scrum approach helped us beat the schedule, our cost performance did not improve. Looking back, several factors could explain this. The team was new to Scrum, required training sessions, and had the chance to execute only three sprints. We did not have a product owner or customer representatives involved, so the requirements remained unclear and conflicting. A new web-based code review tool was deployed during our second sprint but, in retrospect, was not used optimally and ended up causing wasted effort. We also had to deal with a slew of defects and issues from the phase-1 delivery; in other words, we were building on top of a baseline that still contained undiscovered defects. Finally, there was the overhead of fitting the agile software development model within an overarching, heavyweight Integrated Product Development Process (IPDP) covering all phases of program execution.

Personal Reflections

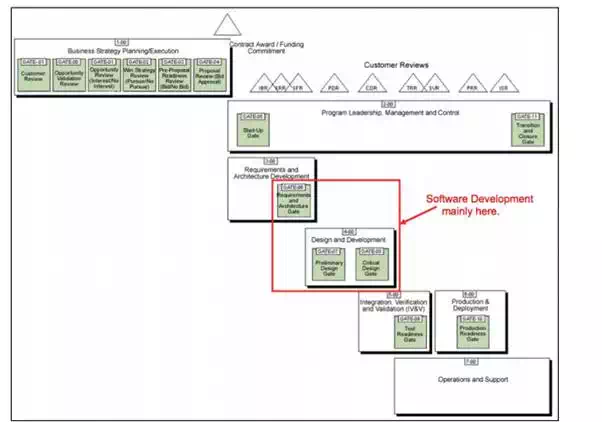

It is important to note that the activity of “software development” fits within an integrated approach to product development that includes program processes such as business planning and requirements analysis, as shown in Figure 1.

Figure 1 – Example Integrated Product Development Process

Part of the problem with adopting Scrum in an environment such as the one shown in Figure 1 was that, as a Software Development Manager, I had only software development activities within my purview, and thus only the software development team could adopt Scrum. Other parts of the engineering organization, such as System Engineering (SEs, who produce the software requirements) and Software Integration and Test (SIs, who integrate and test the system), still operated in the traditional manner: SEs handed off completed requirements to development, which in turn handed off completed code to the SIs.

Since Scrum was adopted only within the confines of software development, and not as part of a complete IPDP overhaul, the development team had to maintain the same “interfaces” to the other activities in the program, produce the same work artifacts (e.g. design documentation and review packages), and employ the same level of process rigor, all of which was monitored by the Software Quality Assurance (SQA) oversight group. This included holding all of the required inspections and reporting progress to management, which expected clear Earned Value (EV)-based progress reports.

Yet despite all of this, Scrum seemed to energize the development team, produce working code much faster, and accelerate the learning curve of new engineers. Clearly, Agile offered some benefit. As a result, I decided to pursue this research on Agile development for my graduate thesis in the M.I.T. Systems Design & Management (SDM) program. The research would take a “deep dive” into Agile practices, with the goal of understanding how to integrate them into a CMMI level 5 software engineering environment. During my time at SDM, I also discovered the fields of Systems Thinking and System Dynamics (SD), and found the SD approach to be the best tool for understanding the emergent behaviour of a ‘complex socio-technical system’, in this case the software development enterprise.