Large-scale software engineering organizations, particularly government contractors, have traditionally used plan-driven, heavyweight, waterfall-style approaches to plan, execute, and monitor large software development efforts. This approach is rooted in ideas from statistical product quality work such as that of W. Edwards Deming and Joseph Juran.

The general idea is that organizations can improve performance by measuring the quality of the product development process and using that information to control and improve process performance. The Capability Maturity Model (CMM) and other statistical/quality-inspired approaches such as Six Sigma and ISO 9000 follow this idea. As a result, the collection and analysis of process data becomes a key part of the product development process (Raynus 1998).

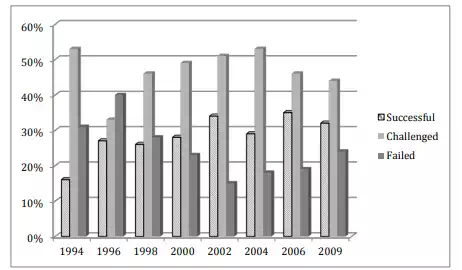

Nevertheless, these types of “big” software projects have been susceptible to failure in terms of cost, schedule, and/or quality. Every few years the Standish Group publishes the “CHAOS Report,” one of the software industry’s most widely cited reports on software project success and failure rates. It measures success by looking at the “Iron Triangle” of project performance: schedule, cost, and scope (whether or not the required features and functions were delivered). Only about a third of software projects are considered to have been successful over the last two decades (see Figure 2). In April 2012, the worldwide cost of IT failures was conservatively calculated to be around $3 trillion (Krigsman 2012).

It is with this in mind that the software industry often refers to “the software crisis.” A whole business ecosystem has evolved around the software industry’s need to address the software crisis, including:

· Project management approaches to controlling schedule and cost, and to building more effective teams.

· Engineering approaches to architecture and design to produce higher quality (low defect, more flexible, more resilient, scalable, modifiable, etc.) software.

· Processes and methodologies for increasing productivity, predictability, and efficiency of software development teams.

· Tools and environments to detect and prevent defects, improve design quality, automate portions of the development workflow, and facilitate team knowledge.

Let us briefly explore some of these in the context of government software systems development.

Software Project Management

The Iron Triangle

Like any human undertaking, projects need to be performed and delivered under certain constraints. Traditionally, these constraints have been listed as “scope,” “time,” and “cost” (Chatfield & Johnson 2007). Note that “scope” in this context refers to the set of required features in the software, as well as the level of quality of these features.

These three constraints are referred to as the “Iron Triangle” (Figure 3) of project management. This is a useful paradigm for tracking project performance: in an ideal world, a software project succeeds when it delivers the full scope of functionality, on schedule as planned, and within budget. In the real world, however, projects suffer from delays, cost overruns, and scope churn. Expressions such as “scope churn” and “scope creep” refer to changes in the project’s initial scope (often captured contractually in the form of requirements). A project with many Change Requests (CRs) is said to experience high scope churn. In order to deliver, project managers must adjust project goals by choosing which of the constraints to relax. When a project encounters performance problems, the manager’s choices are to pull one of the three levers of the Iron Triangle:

· Increase effort (and thus cost) by authorizing overtime or hiring more staff.

· Relax the schedule by delaying delivery or milestones.

· Cut scope by deferring a subset of features to future software releases, or by reducing the quality of the features delivered.
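
The Iron Triangle success criterion described above can be illustrated with a minimal Python sketch. It is not taken from the CHAOS Report or from any project management tool: the names `Baseline`, `violated_constraints`, and `is_successful` are hypothetical, and scope is reduced to a simple feature count, which ignores the quality dimension of scope noted earlier.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Planned or actual values for the three Iron Triangle constraints.

    Hypothetical structure for illustration only.
    """
    cost: float          # total cost, e.g. in dollars
    duration_days: int   # schedule length in days
    features: int        # number of required features delivered (scope)


def violated_constraints(plan: Baseline, actual: Baseline) -> list[str]:
    """Return the Iron Triangle constraints that the actual outcome missed."""
    violated = []
    if actual.cost > plan.cost:
        violated.append("cost")
    if actual.duration_days > plan.duration_days:
        violated.append("time")
    if actual.features < plan.features:
        violated.append("scope")
    return violated


def is_successful(plan: Baseline, actual: Baseline) -> bool:
    """A project succeeds only if it meets cost, time, and scope together."""
    return not violated_constraints(plan, actual)


if __name__ == "__main__":
    plan = Baseline(cost=1_000_000, duration_days=365, features=40)
    # Assumed scenario: the manager authorized overtime to hold the
    # schedule and scope, so only the cost constraint was relaxed.
    actual = Baseline(cost=1_200_000, duration_days=365, features=40)
    print(violated_constraints(plan, actual))
    print(is_successful(plan, actual))
```

In this sketch, pulling any one of the three levers shows up as a violated constraint, so a project that relaxes even one side of the triangle is no longer counted as successful under the strict criterion.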

As will be shown later in this research, taking any one of these actions can result in negative and unforeseen side effects, making things even worse. In section 4.3, we will take a close look at “Brooks’ Law,” which states that “adding manpower to a late software project makes it later.”